The Silence Of Virtualization

This week my home network witnessed the passing of an era. For over 10 years now, I’ve been running a variety of Linux-based servers at home, mainly as development boxes, with a bit of personal web and mail hosting on the side. It made a lot of sense to do this, as old hardware could easily be recycled into a useful second life running a lightweight Linux distro.

But this morning I finally switched the last one off, and a slightly eerie silence descended on my home office. My new laptop, with the help of VMware, is more than capable of wearing all the software development hats, and I’ve jettisoned all local email hosting because Google can do it better.

VMware is the facilitator – I’ve always preferred to use Windows on my desktop and GNU/Linux in the server environment. Virtualization makes it extremely easy to have all the operating systems you need on a single machine.

Quiet – and Greener

Compared to my desktop, my laptop is whisper quiet. That’s a nice bonus – no more buzzing and whirring 24×7, something I was so accustomed to that I didn’t even notice it until it stopped.

One of the most obvious advantages is the electricity savings. In the intervening 10 years, power consumption has moved from being a non-issue to the forefront of server management, and it is now highly visible everywhere as attention turns to carbon emissions and how to reduce them.

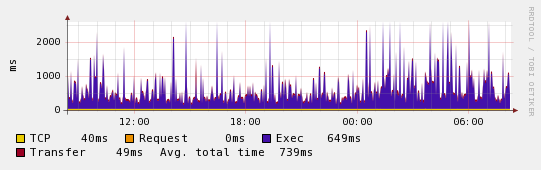

So it feels good to have just one PC at home – a laptop – that gets switched off when not in use. It’s an improvement, although my green credentials still need work given the redundancy built into our uptime monitoring network.

The other obvious advantage is having full access to my development environment whilst I’m on the road. I have quite a number of travel commitments this year, so I expect to make good use of that capability.

The final benefit is the ease of producing backups. The full virtual machine sits in a tidy 8GB disk image, and a backup snapshot becomes as simple as shutting the VM down and copying the file that contains it.
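As a rough illustration of that copy step, here is a minimal Python sketch. It assumes the VM has already been shut down and that its disk image (plus any config files) lives in one folder; the function name `backup_vm` and the paths in the usage note are hypothetical, not anything VMware provides.

```python
import shutil
import time
from pathlib import Path


def backup_vm(vm_dir, backup_root):
    """Copy a powered-off VM's folder into a timestamped backup folder.

    Assumption: the VM is shut down, so its files are not changing
    while they are being copied.
    """
    vm_dir = Path(vm_dir)
    if not vm_dir.is_dir():
        raise FileNotFoundError(f"VM folder not found: {vm_dir}")

    # Timestamp the destination so each backup is kept separately.
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = Path(backup_root) / f"{vm_dir.name}-{stamp}"

    # copytree copies every file in the folder and raises an error if
    # the destination already exists, so an older backup is never overwritten.
    shutil.copytree(vm_dir, dest)
    return dest
```

Calling something like `backup_vm("C:/VMs/devbox", "E:/backups")` (both paths made up for this example) would leave a dated copy of the whole VM folder on the backup drive.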

Fewer Points of Failure

Considering backups raises an interesting point – the less hardware that is in use, the smaller your chance of hardware failure. Consider how much time it takes to rebuild a server even with a great backup regime, and virtual servers become much more appealing. If my host laptop fails, getting my servers back up should be as simple as copying a handful of files and reinstalling VMware.

What will tomorrow’s data centers hold? One enormously powerful grid computing appliance, containing thousands of virtual servers? The idea of combining hundreds of physical servers into a single computer, only to then run hundreds of virtual servers on it, does seem a little strange, I’ll admit.

Update: Further reading on this concept at SitePoint.